AWS Serverless Image Processing Architecture 🖼️

Author: Chengchang Yu (@chengchangyu)

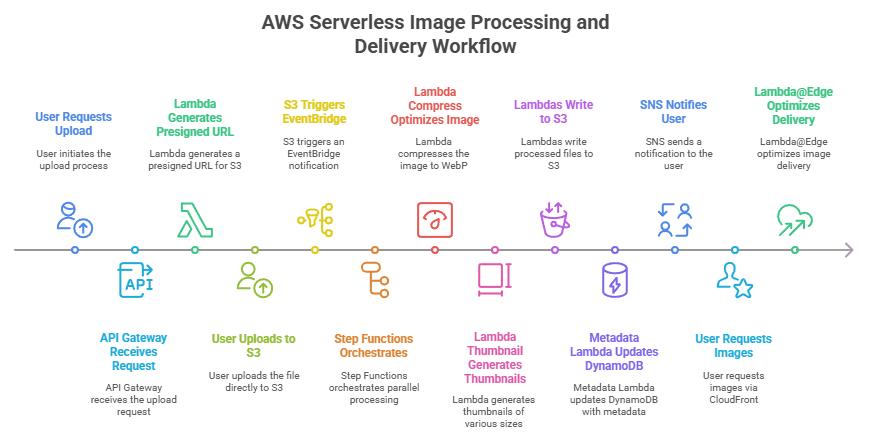

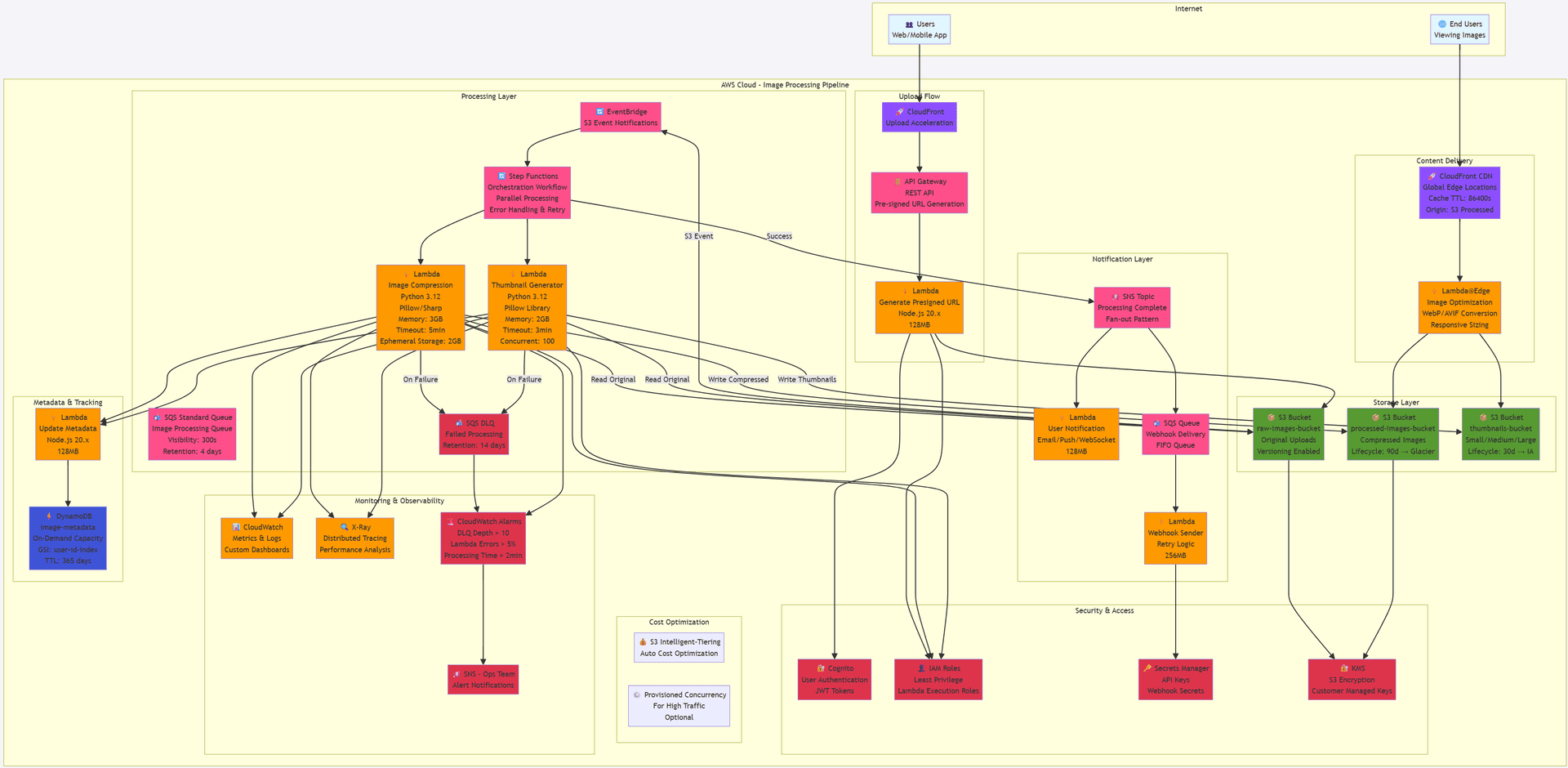

AWS Serverless Image Processing and Delivery Workflow

How would you design a serverless image processing service that automatically compresses and generates thumbnails after users upload to S3, while scaling to handle 1M+ images per day at minimal cost per image?

Automated Image Compression & Thumbnail Generation Pipeline (generated via Claude Sonnet 4.5)

Architecture Flow 🔄

Upload Flow (Steps 1-3)

1. User requests upload → API Gateway

2. Lambda generates presigned S3 URL (valid 15 minutes)

3. User uploads directly to S3, so large files never pass through API Gateway or Lambda (whose request payload limits are 10 MB and 6 MB respectively)

Processing Flow (Steps 4-8)

4. S3 emits an Object Created event to EventBridge, and an EventBridge rule starts the Step Functions workflow

5. Step Functions orchestrates parallel processing:

├─ Lambda Compress: Optimize image (JPEG/PNG → WebP, 80% quality)

└─ Lambda Thumbnail: Generate 3 sizes fitting within 150x150, 300x300 and 600x600 (aspect ratio preserved)

6. Both Lambdas write to respective S3 buckets

7. Metadata Lambda updates DynamoDB with:

- Original size, compressed size, savings %

- Thumbnail URLs

- Processing timestamp

8. SNS notifies user of completion
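Steps 4 and 5 rely on wiring that the flow leaves implicit: EventBridge delivery has to be switched on for the raw bucket, and a rule has to match its Object Created events and start the state machine. The sketch below only builds the request payloads. It assumes the raw-images-bucket name and uploads/ prefix used elsewhere in this post; the rule name is hypothetical.

```python
import json

RAW_BUCKET = "raw-images-bucket"
UPLOAD_PREFIX = "uploads/"


def bucket_notification_config() -> dict:
    # An empty EventBridgeConfiguration turns on delivery of this bucket's
    # S3 events to the default EventBridge bus.
    return {"EventBridgeConfiguration": {}}


def object_created_pattern(bucket: str = RAW_BUCKET, prefix: str = UPLOAD_PREFIX) -> dict:
    # Match only new uploads under the prefix, so objects the pipeline writes
    # to its output buckets never re-trigger the workflow.
    return {
        "source": ["aws.s3"],
        "detail-type": ["Object Created"],
        "detail": {
            "bucket": {"name": [bucket]},
            "object": {"key": [{"prefix": prefix}]},
        },
    }


def put_rule_kwargs(rule_name: str = "image-upload-created") -> dict:
    # Hypothetical rule name; EventPattern must be passed as a JSON string.
    return {
        "Name": rule_name,
        "EventPattern": json.dumps(object_created_pattern()),
        "State": "ENABLED",
    }
```

These would be passed to `s3.put_bucket_notification_configuration(Bucket=RAW_BUCKET, NotificationConfiguration=bucket_notification_config())` (note that this call replaces any existing notification configuration on the bucket) and `events.put_rule(**put_rule_kwargs())`. The rule still needs the state machine added as a target with `events.put_targets`, using a role that allows `states:StartExecution`.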

Delivery Flow (Steps 9-10)

9. End users request images via CloudFront

10. Lambda@Edge optimizes on-the-fly:

- Detects browser support (WebP/AVIF) from the Accept request header

- Returns appropriate format

- Caches at edge locations
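Step 10 could look like the following viewer-request Lambda@Edge function in Python. It is a sketch under two assumptions: a .webp object exists next to each .jpg/.jpeg/.png at the same path (the compressor below writes to a compressed/ prefix, so the URI mapping would have to follow your actual key layout), and only WebP is negotiated, since nothing in this pipeline produces AVIF yet.

```python
import re

# Extensions that may be swapped for a WebP sibling (assumption)
_SWAPPABLE = re.compile(r"\.(jpe?g|png)$", re.IGNORECASE)


def lambda_handler(event, context):
    request = event["Records"][0]["cf"]["request"]
    headers = request.get("headers", {})

    # CloudFront passes headers keyed by lowercase name, each a list of {key, value}
    accept = ",".join(h["value"] for h in headers.get("accept", []))

    if "image/webp" in accept and _SWAPPABLE.search(request["uri"]):
        # Serve the WebP variant, e.g. /img/photo.jpg -> /img/photo.webp
        request["uri"] = _SWAPPABLE.sub(".webp", request["uri"])

    # Returning the (possibly modified) request lets CloudFront continue
    return request
```

Rewriting the URI at viewer-request time means CloudFront caches the WebP and original variants under different URIs, so a cached WebP is never served to a browser that did not ask for it. The trade-off is that viewer-request functions run on every request, cache hits included.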

Step Functions Workflow Definition 📋

```json
{
  "Comment": "Image Processing Workflow",
  "StartAt": "Parallel Processing",
  "States": {
    "Parallel Processing": {
      "Type": "Parallel",
      "Branches": [
        {
          "StartAt": "Compress Image",
          "States": {
            "Compress Image": {
              "Type": "Task",
              "Resource": "arn:aws:lambda:REGION:ACCOUNT:function:ImageCompressor",
              "Retry": [
                {
                  "ErrorEquals": ["States.TaskFailed"],
                  "IntervalSeconds": 2,
                  "MaxAttempts": 3,
                  "BackoffRate": 2
                }
              ],
              "Catch": [
                {
                  "ErrorEquals": ["States.ALL"],
                  "Next": "Compression Failed"
                }
              ],
              "End": true
            },
            "Compression Failed": {
              "Type": "Fail",
              "Error": "CompressionError",
              "Cause": "Image compression failed after retries"
            }
          }
        },
        {
          "StartAt": "Generate Thumbnails",
          "States": {
            "Generate Thumbnails": {
              "Type": "Task",
              "Resource": "arn:aws:lambda:REGION:ACCOUNT:function:ThumbnailGenerator",
              "Retry": [
                {
                  "ErrorEquals": ["States.TaskFailed"],
                  "IntervalSeconds": 2,
                  "MaxAttempts": 3,
                  "BackoffRate": 2
                }
              ],
              "Catch": [
                {
                  "ErrorEquals": ["States.ALL"],
                  "Next": "Thumbnail Failed"
                }
              ],
              "End": true
            },
            "Thumbnail Failed": {
              "Type": "Fail",
              "Error": "ThumbnailError",
              "Cause": "Thumbnail generation failed after retries"
            }
          }
        }
      ],
      "Next": "Update Metadata"
    },
    "Update Metadata": {
      "Type": "Task",
      "Resource": "arn:aws:lambda:REGION:ACCOUNT:function:MetadataUpdater",
      "Next": "Send Notification"
    },
    "Send Notification": {
      "Type": "Task",
      "Resource": "arn:aws:states:::sns:publish",
      "Parameters": {
        "TopicArn": "arn:aws:sns:REGION:ACCOUNT:ImageProcessingComplete",
        "Message.$": "$.notificationMessage"
      },
      "End": true
    }
  }
}
```
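MetadataUpdater's code is not shown, but its contract follows from the definition: a Parallel state outputs an array with one element per branch (here the compression result, then the thumbnail result), and Send Notification expects a notificationMessage string in the task output. A minimal sketch under those assumptions; the imageId hashing scheme and the one-year TTL are hypothetical choices, not part of the original design.

```python
import hashlib
import json
import time
from decimal import Decimal

TABLE_NAME = "image-metadata"
TTL_SECONDS = 365 * 24 * 3600  # assumption: keep metadata for one year


def build_metadata_item(branch_results, now=None):
    """Merge the two Parallel-branch outputs into one DynamoDB item."""
    compress, thumbs = branch_results  # branch order: compress, thumbnails
    now = int(time.time()) if now is None else int(now)
    original_key = compress["originalKey"]
    return {
        # Hypothetical ID scheme: short, stable hash of the S3 key
        "imageId": "img_" + hashlib.sha256(original_key.encode()).hexdigest()[:12],
        "userId": original_key.split("/")[1],  # key layout: uploads/{userId}/...
        "uploadedAt": now,
        "originalKey": original_key,
        "compressedKey": compress["compressedKey"],
        "thumbnails": thumbs["thumbnails"],
        "originalSize": compress["originalSize"],
        "compressedSize": compress["compressedSize"],
        "savingsPercent": compress["savingsPercent"],
        "status": "completed",
        "expiresAt": now + TTL_SECONDS,
    }


def to_dynamodb(value):
    """boto3's DynamoDB resource rejects float values; convert them to Decimal."""
    if isinstance(value, float):
        return Decimal(str(value))
    if isinstance(value, dict):
        return {k: to_dynamodb(v) for k, v in value.items()}
    if isinstance(value, list):
        return [to_dynamodb(v) for v in value]
    return value


def notification_message(item):
    # The SNS Message parameter must be a string, so serialize a summary
    keys = ("imageId", "compressedKey", "thumbnails", "savingsPercent")
    return json.dumps({k: item[k] for k in keys})


_table = None


def lambda_handler(event, context):
    global _table
    if _table is None:
        import boto3  # deferred; the table handle is reused while the container is warm
        _table = boto3.resource("dynamodb").Table(TABLE_NAME)
    item = build_metadata_item(event)
    _table.put_item(Item=to_dynamodb(item))
    # "Send Notification" reads $.notificationMessage from this output
    return {"imageId": item["imageId"], "notificationMessage": notification_message(item)}
```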

Lambda Function Details 💻

1. Presigned URL Generator

```python
# lambda_presign.py
import json
import os
from datetime import datetime, timezone

import boto3

s3_client = boto3.client('s3')  # created once, reused across warm invocations


def lambda_handler(event, context):
    # User ID (the 'sub' claim) from the API Gateway authorizer
    user_id = event['requestContext']['authorizer']['claims']['sub']
    # Keep only the base name in case the client sends a path
    filename = os.path.basename(event['queryStringParameters']['filename'])
    content_type = event['queryStringParameters']['contentType']

    # Generate unique key (UTC timestamp prefix)
    timestamp = datetime.now(timezone.utc).strftime('%Y%m%d_%H%M%S')
    key = f"uploads/{user_id}/{timestamp}_{filename}"

    # Generate presigned URL (15 min expiry). ContentType is part of the
    # signature, so the client's PUT must send exactly this Content-Type.
    presigned_url = s3_client.generate_presigned_url(
        'put_object',
        Params={
            'Bucket': 'raw-images-bucket',
            'Key': key,
            'ContentType': content_type,
        },
        ExpiresIn=900,
    )

    return {
        'statusCode': 200,
        'body': json.dumps({
            'uploadUrl': presigned_url,
            'key': key,
        }),
    }
```
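On the client side (step 3), the app PUTs the raw bytes to uploadUrl. A standard-library sketch, assuming the JSON body returned by the presign Lambda above; the helper names are hypothetical.

```python
import json
import urllib.request


def build_upload_request(upload_url: str, data: bytes, content_type: str) -> urllib.request.Request:
    # Content-Type must match the contentType passed to the presign endpoint,
    # because it was included in the signature
    return urllib.request.Request(
        upload_url, data=data, method="PUT", headers={"Content-Type": content_type}
    )


def upload_image(presign_body: str, data: bytes, content_type: str) -> str:
    """PUT the bytes to the presigned URL and return the S3 key on success."""
    body = json.loads(presign_body)  # {"uploadUrl": ..., "key": ...}
    request = build_upload_request(body["uploadUrl"], data, content_type)
    # urlopen raises urllib.error.HTTPError on 4xx/5xx (an expired URL gets a 403)
    with urllib.request.urlopen(request, timeout=30) as response:
        response.read()
    return body["key"]
```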

2. Image Compression Lambda

```python
# lambda_compress.py
import io
import os

import boto3
from PIL import Image, ImageOps

s3_client = boto3.client('s3')


def lambda_handler(event, context):
    # Step Functions passes the EventBridge "Object Created" event through
    bucket = event['detail']['bucket']['name']
    key = event['detail']['object']['key']

    # Download original image
    response = s3_client.get_object(Bucket=bucket, Key=key)
    image_data = response['Body'].read()

    # Open and apply the EXIF orientation so phone photos are not stored rotated
    img = ImageOps.exif_transpose(Image.open(io.BytesIO(image_data)))

    # WebP supports alpha, so keep RGBA; normalize other modes (P, LA, CMYK, ...)
    if img.mode not in ('RGB', 'RGBA'):
        has_alpha = 'A' in img.mode or 'transparency' in img.info
        img = img.convert('RGBA' if has_alpha else 'RGB')

    # Compress to WebP at quality 80 (method 0-6 trades encode speed for size)
    output_buffer = io.BytesIO()
    img.save(output_buffer, format='WEBP', quality=80, method=4)
    compressed_bytes = output_buffer.getvalue()

    # Upload with a .webp extension that matches the new format
    stem, _ = os.path.splitext(key.replace('uploads/', 'compressed/', 1))
    compressed_key = f"{stem}.webp"
    s3_client.put_object(
        Bucket='processed-images-bucket',
        Key=compressed_key,
        Body=compressed_bytes,
        ContentType='image/webp',
        CacheControl='max-age=31536000',
    )

    original_size = len(image_data)
    compressed_size = len(compressed_bytes)
    savings = (original_size - compressed_size) / original_size * 100

    return {
        'originalKey': key,
        'compressedKey': compressed_key,
        'originalSize': original_size,
        'compressedSize': compressed_size,
        'savingsPercent': round(savings, 2),
    }
```

3. Thumbnail Generator Lambda

```python
# lambda_thumbnail.py
import io
import os

import boto3
from PIL import Image, ImageOps

s3_client = boto3.client('s3')

# Bounding boxes: thumbnail() keeps the aspect ratio and never upscales
THUMBNAIL_SIZES = {
    'small': (150, 150),
    'medium': (300, 300),
    'large': (600, 600),
}


def lambda_handler(event, context):
    bucket = event['detail']['bucket']['name']
    key = event['detail']['object']['key']

    # Download original
    response = s3_client.get_object(Bucket=bucket, Key=key)
    image_data = response['Body'].read()

    img = ImageOps.exif_transpose(Image.open(io.BytesIO(image_data)))
    # JPEG has no alpha channel: saving RGBA/P/LA images (e.g. PNGs) as JPEG
    # raises OSError, so flatten to RGB first
    if img.mode != 'RGB':
        img = img.convert('RGB')

    # Keep the user/timestamp path (as the compressed key does) so thumbnail
    # keys stay unique per user; the output is JPEG, so use .jpg
    stem, _ = os.path.splitext(key.replace('uploads/', '', 1))

    thumbnail_urls = {}
    for size_name, dimensions in THUMBNAIL_SIZES.items():
        # Create thumbnail (Image.Resampling requires Pillow >= 9.1)
        thumb = img.copy()
        thumb.thumbnail(dimensions, Image.Resampling.LANCZOS)

        # Save to buffer
        buffer = io.BytesIO()
        thumb.save(buffer, format='JPEG', quality=85, optimize=True)

        # Upload to S3
        thumb_key = f"thumbnails/{size_name}/{stem}.jpg"
        s3_client.put_object(
            Bucket='thumbnails-bucket',
            Key=thumb_key,
            Body=buffer.getvalue(),
            ContentType='image/jpeg',
            CacheControl='max-age=31536000',
        )
        thumbnail_urls[size_name] = f"https://cdn.example.com/{thumb_key}"

    return {
        'originalKey': key,
        'thumbnails': thumbnail_urls,
    }
```
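The thumbnail sizes are bounding boxes rather than crops: Image.thumbnail() shrinks the image until it fits inside the box while keeping the aspect ratio, and leaves smaller images untouched. The arithmetic, as an approximation only (Pillow picks whichever rounding best preserves the aspect ratio, so its result can differ from this sketch by one pixel); fit_within is a hypothetical helper name:

```python
def fit_within(width: int, height: int, box_w: int, box_h: int) -> tuple:
    """Approximate the size Image.thumbnail() produces for a bounding box."""
    # Scale down (never up) by the tighter of the two constraints
    scale = min(box_w / width, box_h / height, 1.0)
    return max(1, round(width * scale)), max(1, round(height * scale))


print(fit_within(3000, 2000, 150, 150))  # landscape photo -> 'small'
print(fit_within(2000, 4000, 300, 300))  # portrait photo -> 'medium'
```

So a 3000x2000 upload yields a 150x100 "small" thumbnail, not a 150x150 square; if square tiles are required, the images would need cropping (for example with ImageOps.fit) instead.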

DynamoDB Schema 📊

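Table: image-metadata (shown as CloudFormation AWS::DynamoDB::Table properties; with the CreateTable API, TTL is enabled through a separate UpdateTimeToLive call)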

```json
{
  "TableName": "image-metadata",
  "KeySchema": [
    { "AttributeName": "imageId", "KeyType": "HASH" },
    { "AttributeName": "uploadedAt", "KeyType": "RANGE" }
  ],
  "AttributeDefinitions": [
    { "AttributeName": "imageId", "AttributeType": "S" },
    { "AttributeName": "uploadedAt", "AttributeType": "N" },
    { "AttributeName": "userId", "AttributeType": "S" }
  ],
  "GlobalSecondaryIndexes": [
    {
      "IndexName": "user-id-index",
      "KeySchema": [
        { "AttributeName": "userId", "KeyType": "HASH" },
        { "AttributeName": "uploadedAt", "KeyType": "RANGE" }
      ],
      "Projection": { "ProjectionType": "ALL" }
    }
  ],
  "BillingMode": "PAY_PER_REQUEST",
  "TimeToLiveSpecification": {
    "Enabled": true,
    "AttributeName": "expiresAt"
  }
}
```
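The user-id-index GSI exists to answer "list this user's images, newest first". A sketch of the request in the low-level client's shape; the function name and the 50-item page size are illustrative.

```python
def user_images_query(user_id: str, since_epoch: int, limit: int = 50) -> dict:
    """Keyword arguments for a low-level DynamoDB client.query() on the GSI."""
    return {
        "TableName": "image-metadata",
        "IndexName": "user-id-index",
        "KeyConditionExpression": "userId = :uid AND uploadedAt >= :since",
        "ExpressionAttributeValues": {
            ":uid": {"S": user_id},
            ":since": {"N": str(since_epoch)},  # numbers are sent as strings
        },
        "ScanIndexForward": False,  # sort key descending: newest first
        "Limit": limit,
    }
```

Pass it as `boto3.client("dynamodb").query(**user_images_query("user_xyz789", 1704067200))` and follow LastEvaluatedKey for further pages. GSI reads are eventually consistent, so an image written a moment ago may not appear yet.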

Sample Item

```json
{
  "imageId": "img_abc123",
  "userId": "user_xyz789",
  "uploadedAt": 1704067200,
  "originalKey": "uploads/user_xyz789/20240101_120000_photo.jpg",
  "compressedKey": "compressed/user_xyz789/20240101_120000_photo.webp",
  "thumbnails": {
    "small": "https://cdn.example.com/thumbnails/small/photo.jpg",
    "medium": "https://cdn.example.com/thumbnails/medium/photo.jpg",
    "large": "https://cdn.example.com/thumbnails/large/photo.jpg"
  },
  "originalSize": 5242880,
  "compressedSize": 1048576,
  "savingsPercent": 80.0,
  "processingTimeMs": 2340,
  "status": "completed",
  "expiresAt": 1735689600
}
```

Cost Analysis 💰

Monthly Cost Estimate (10,000 images/month)

| Service | Usage | Cost (USD/month) |
|---|---|---|
| S3 Storage | 50GB raw + 20GB processed | $1.61 |
| S3 Requests | 10K PUT + 100K GET | $0.55 |
| Lambda Invocations | 30K invocations (3 per image) | $0.60 |
| Lambda Duration | 90K GB-seconds | $1.50 |
| Step Functions | 10K state transitions | $0.25 |
| DynamoDB | 10K writes, 50K reads | $1.25 |
| CloudFront | 100GB transfer | $8.50 |
| EventBridge | 10K events | $0.10 |
| SNS | 10K notifications | $0.50 |
| CloudWatch Logs | 5GB logs | $2.50 |
| Total | | ~$17.36 |

Cost per image processed: ~$0.0017
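Each row of the table is usage multiplied by a unit price, so it can be re-derived. The prices below are assumptions (us-east-1 on-demand list prices, free tier ignored, SNS delivery charges excluded) and change over time, so treat this as a sketch for checking the arithmetic rather than a quote. At these prices the request-priced rows (S3 requests, Lambda invocations, DynamoDB, EventBridge, SNS) come to cents at 10,000 images, and the total lands around $14.5, dominated by CloudFront transfer, CloudWatch Logs, Lambda duration and S3 storage.

```python
# Assumed unit prices in USD (us-east-1 list prices, no free tier); verify before use.
PRICES = {
    "s3_storage_gb_month": 0.023,
    "s3_put_per_1k": 0.005,
    "s3_get_per_1k": 0.0004,
    "lambda_per_1m_requests": 0.20,
    "lambda_per_gb_second": 0.0000166667,
    "sfn_per_1k_transitions": 0.025,
    "ddb_per_1m_writes": 0.625,
    "ddb_per_1m_reads": 0.125,
    "cloudfront_per_gb": 0.085,
    "eventbridge_per_1m_events": 1.00,
    "sns_per_1m_publishes": 0.50,
    "logs_ingest_per_gb": 0.50,
}


def monthly_costs(usage: dict, p: dict = PRICES) -> dict:
    """Cost per service: usage quantity x unit price."""
    return {
        "S3 Storage": usage["storage_gb"] * p["s3_storage_gb_month"],
        "S3 Requests": usage["puts"] / 1e3 * p["s3_put_per_1k"] + usage["gets"] / 1e3 * p["s3_get_per_1k"],
        "Lambda Invocations": usage["invocations"] / 1e6 * p["lambda_per_1m_requests"],
        "Lambda Duration": usage["gb_seconds"] * p["lambda_per_gb_second"],
        "Step Functions": usage["transitions"] / 1e3 * p["sfn_per_1k_transitions"],
        "DynamoDB": usage["writes"] / 1e6 * p["ddb_per_1m_writes"] + usage["reads"] / 1e6 * p["ddb_per_1m_reads"],
        "CloudFront": usage["cdn_gb"] * p["cloudfront_per_gb"],
        "EventBridge": usage["events"] / 1e6 * p["eventbridge_per_1m_events"],
        "SNS": usage["notifications"] / 1e6 * p["sns_per_1m_publishes"],
        "CloudWatch Logs": usage["log_gb"] * p["logs_ingest_per_gb"],
    }


# Usage column from the table above (10,000 images/month)
TABLE_USAGE = {
    "storage_gb": 70, "puts": 10_000, "gets": 100_000, "invocations": 30_000,
    "gb_seconds": 90_000, "transitions": 10_000, "writes": 10_000, "reads": 50_000,
    "cdn_gb": 100, "events": 10_000, "notifications": 10_000, "log_gb": 5,
}

costs = monthly_costs(TABLE_USAGE)
total = sum(costs.values())
for name, usd in costs.items():
    print(f"{name:<20} ${usd:9.4f}")
print(f"{'Total':<20} ${total:9.2f}  (${total / 10_000:.5f} per image)")
```

The Step Functions row uses the table's figure of one transition per image; by a rough count the workflow above enters about five states per image, so that line is probably several times higher.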

Performance Metrics 📈

Target SLAs

- Upload URL Generation: < 200ms

- Image Compression: < 3 seconds (for 5MB image)

- Thumbnail Generation: < 2 seconds

- Total Processing Time: < 5 seconds (P95)

- CDN Cache Hit Rate: > 95%

Scalability

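- Concurrent Processing: 1,000 images simultaneously (about 2,000 concurrent Lambda executions, since each image runs two functions in parallel; this exceeds Lambda's default regional concurrency quota of 1,000, so request an increase)

- Daily Capacity: 1M+ images

- Auto-scaling: Automatic; Lambda adds concurrent executions as workflows start (queue depth only matters if the SQS batching from the optimization tips is added)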

- Global Delivery: < 100ms latency (via CloudFront)
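The capacity figures can be sanity-checked with Little's law: work in flight equals arrival rate times the time each item spends in the system. The sketch assumes the 5 s P95 processing time above (conservative, since the average is lower), two Lambdas running in parallel per image, and an illustrative 5x peak-to-average factor that is an assumption rather than a measured figure.

```python
import math

SECONDS_PER_DAY = 86_400


def required_concurrency(images_per_day: float, seconds_per_image: float,
                         lambdas_per_image: int = 2, peak_factor: float = 1.0) -> int:
    """Little's law: executions in flight = arrival rate x time in system."""
    images_per_second = images_per_day / SECONDS_PER_DAY * peak_factor
    return math.ceil(images_per_second * seconds_per_image * lambdas_per_image)


print(required_concurrency(1_000_000, 5))                 # 116 (average load)
print(required_concurrency(1_000_000, 5, peak_factor=5))  # 579 (assumed 5x peak)
```

At 1M images per day the average load is roughly 12 images per second, far below the 1,000-image burst figure above; bursts, not the daily total, drive the concurrency you need to provision for.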

Monitoring & Alerts 🚨

CloudWatch Alarms

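```yaml
Alarms:
  - Name: HighDLQDepth
    Metric: ApproximateNumberOfMessagesVisible  # SQS metric; assumes a dead-letter queue (not in the diagram)
    Threshold: 10
    Action: SNS notification to ops team
  - Name: LambdaHighErrorRate
    Metric: Errors  # as a rate via metric math: 100 * Errors / Invocations
    Threshold: 5% of invocations
    Period: 5 minutes
  - Name: SlowProcessing
    Metric: Duration
    Threshold: 120 seconds (P95)
  - Name: HighS3Costs
    Metric: EstimatedCharges  # month-to-date total, published only in us-east-1
    Threshold: $50/day
```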
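Lambda publishes Errors and Invocations but no error-rate metric, so the 5% alarm needs metric math over the two. A sketch that builds put_metric_alarm arguments in boto3's request shape; the alarm-name pattern and the SNS topic ARN are placeholders.

```python
def error_rate_alarm(function_name: str, topic_arn: str, threshold_pct: float = 5.0) -> dict:
    """put_metric_alarm kwargs: alarm when 100 * Errors / Invocations > threshold over 5 min."""

    def lambda_metric(metric_id: str, name: str) -> dict:
        return {
            "Id": metric_id,
            "MetricStat": {
                "Metric": {
                    "Namespace": "AWS/Lambda",
                    "MetricName": name,
                    "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
                },
                "Period": 300,  # 5 minutes
                "Stat": "Sum",
            },
            "ReturnData": False,  # inputs only; the expression is what gets alarmed
        }

    return {
        "AlarmName": f"{function_name}-LambdaHighErrorRate",
        "Metrics": [
            lambda_metric("errors", "Errors"),
            lambda_metric("invocations", "Invocations"),
            {"Id": "error_rate", "Expression": "100 * errors / invocations",
             "Label": "Error rate (%)", "ReturnData": True},
        ],
        "ComparisonOperator": "GreaterThanThreshold",
        "Threshold": threshold_pct,
        "EvaluationPeriods": 1,
        "TreatMissingData": "notBreaching",  # periods with no data do not trigger the alarm
        "AlarmActions": [topic_arn],
    }
```

Apply it per function, e.g. `boto3.client("cloudwatch").put_metric_alarm(**error_rate_alarm("ImageCompressor", topic_arn))`.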

Custom Metrics

- Image processing success rate

- Average compression ratio

- Storage savings (GB/month)

- Processing time by image size

- Thumbnail generation failures
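Custom metrics like these can be emitted from inside the Lambdas without extra API calls by printing CloudWatch Embedded Metric Format (EMF) JSON to stdout; CloudWatch Logs extracts the metrics from the log line. A sketch with a hypothetical namespace and metric names. Emitting ProcessingSuccess as 1 or 0 on every run means its average is the success rate.

```python
import json
import time

NAMESPACE = "ImageProcessing"  # hypothetical namespace


def emf_record(function_name: str, savings_pct: float, processing_ms: float, succeeded: bool) -> str:
    """Build one Embedded Metric Format log line; print() it from the handler."""
    return json.dumps({
        "_aws": {
            "Timestamp": int(time.time() * 1000),  # epoch milliseconds
            "CloudWatchMetrics": [{
                "Namespace": NAMESPACE,
                "Dimensions": [["FunctionName"]],
                "Metrics": [
                    {"Name": "CompressionSavings", "Unit": "Percent"},
                    {"Name": "ProcessingTime", "Unit": "Milliseconds"},
                    {"Name": "ProcessingSuccess", "Unit": "Count"},
                ],
            }],
        },
        # Dimension and metric values are top-level members of the same object
        "FunctionName": function_name,
        "CompressionSavings": savings_pct,
        "ProcessingTime": processing_ms,
        "ProcessingSuccess": 1 if succeeded else 0,
    })
```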

Security Best Practices 🔐

1. S3 Bucket Policies

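Lambda functions call S3 with their execution role's credentials, so the Allow statement names that role rather than the lambda.amazonaws.com service principal (the role name below is a placeholder). The Deny statement covers both the bucket and its objects.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Deny",
      "Principal": "*",
      "Action": "s3:*",
      "Resource": [
        "arn:aws:s3:::raw-images-bucket",
        "arn:aws:s3:::raw-images-bucket/*"
      ],
      "Condition": {
        "Bool": {
          "aws:SecureTransport": "false"
        }
      }
    },
    {
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::ACCOUNT:role/ImageProcessingLambdaRole"
      },
      "Action": ["s3:GetObject", "s3:PutObject"],
      "Resource": "arn:aws:s3:::raw-images-bucket/*"
    }
  ]
}
```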

2. IAM Lambda Execution Role

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["s3:GetObject"],
      "Resource": "arn:aws:s3:::raw-images-bucket/*"
    },
    {
      "Effect": "Allow",
      "Action": ["s3:PutObject"],
      "Resource": [
        "arn:aws:s3:::processed-images-bucket/*",
        "arn:aws:s3:::thumbnails-bucket/*"
      ]
    },
    {
      "Effect": "Allow",
      "Action": ["dynamodb:PutItem", "dynamodb:UpdateItem"],
      "Resource": "arn:aws:dynamodb:*:*:table/image-metadata"
    },
    {
      "Effect": "Allow",
      "Action": ["kms:Decrypt", "kms:GenerateDataKey"],
      "Resource": "arn:aws:kms:*:*:key/*"
    }
  ]
}
```

3. Encryption

- S3: Server-side encryption with KMS (SSE-KMS)

- DynamoDB: Encryption at rest enabled

- Secrets Manager: Webhook secrets encrypted

- CloudFront: HTTPS only (TLS 1.2+)
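Default SSE-KMS encryption is configured per bucket. Without S3 Bucket Keys, every object write and read makes its own KMS request, which adds up at 1M+ images per day, so enabling Bucket Keys is worth considering. A sketch of the configuration, assuming one customer-managed key whose ARN is a placeholder:

```python
BUCKETS = ["raw-images-bucket", "processed-images-bucket", "thumbnails-bucket"]


def default_kms_encryption(kms_key_arn: str) -> dict:
    """ServerSideEncryptionConfiguration for s3.put_bucket_encryption()."""
    return {
        "Rules": [{
            "ApplyServerSideEncryptionByDefault": {
                "SSEAlgorithm": "aws:kms",
                "KMSMasterKeyID": kms_key_arn,
            },
            # A bucket-level key reduces the number of KMS requests (and their cost)
            "BucketKeyEnabled": True,
        }]
    }
```

Apply it with `s3.put_bucket_encryption(Bucket=name, ServerSideEncryptionConfiguration=default_kms_encryption(key_arn))` for each name in BUCKETS. The kms:Decrypt and kms:GenerateDataKey permissions in the execution role above are what let the Lambdas read and write these objects.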

Disaster Recovery & Backup 🔄

Backup Strategy

S3 Versioning:

- Enabled on raw-images-bucket

- Retain 30 versions

- Lifecycle: Delete after 90 days

Cross-Region Replication:

- Replicate processed images to us-west-2 (replication requires versioning on both the source and destination buckets)

- For disaster recovery

- Compliance requirements

DynamoDB Backups:

- Point-in-time recovery enabled

- Automated daily backups

- Retention: 35 days
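The versioning bullets map onto a lifecycle rule for the raw bucket. The post does not spell out how "30 versions" and "90 days" combine, so the sketch takes one reading (an assumption): a noncurrent version is deleted once it has been noncurrent for 90 days, but the 30 most recent noncurrent versions of each key are always kept.

```python
def raw_bucket_version_lifecycle(noncurrent_days: int = 90, keep_versions: int = 30) -> dict:
    """LifecycleConfiguration for s3.put_bucket_lifecycle_configuration()."""
    return {
        "Rules": [{
            "ID": "prune-old-versions",
            "Filter": {"Prefix": ""},  # whole bucket
            "Status": "Enabled",
            "NoncurrentVersionExpiration": {
                # Delete versions noncurrent for this many days...
                "NoncurrentDays": noncurrent_days,
                # ...except the newest N noncurrent versions of each key
                "NewerNoncurrentVersions": keep_versions,
            },
        }]
    }
```

Apply it with `s3.put_bucket_lifecycle_configuration(Bucket="raw-images-bucket", LifecycleConfiguration=raw_bucket_version_lifecycle())`.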

Recovery Objectives

- RTO (Recovery Time Objective): < 1 hour

- RPO (Recovery Point Objective): < 5 minutes

- Data Durability: 99.999999999% (S3 standard)

Optimization Tips 🚀

1. Lambda Performance

- Use ARM64 (Graviton2): Lambda's Arm price per GB-second is about 20% lower than x86

- Enable Lambda SnapStart for Java/Python (faster cold starts)

- Use /tmp ephemeral storage for large image processing

- Create SDK clients (S3, DynamoDB) outside the handler so HTTP connections are reused across warm invocations

2. Cost Optimization

- Enable S3 Intelligent-Tiering for automatic cost optimization

- Use S3 Lifecycle policies to move old images to Glacier

- Implement CloudFront caching to reduce S3 GET requests

- Use Reserved Concurrency only for critical functions

3. Scalability

- Implement SQS batching (process 10 images per Lambda invocation)

- Use Step Functions Express for high-volume workflows

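- DynamoDB on-demand mode (PAY_PER_REQUEST, as configured above) already scales automatically; auto-scaling applies only if you switch to provisioned capacity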

- Consider EFS for shared image processing libraries
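If SQS batching is added, one failed image should not force the whole batch of 10 to be retried. Lambda's partial batch response does this: with ReportBatchItemFailures enabled on the event source mapping (and BatchSize set to 10), only the message IDs returned in batchItemFailures go back to the queue. A sketch assuming hypothetical message bodies of the form {"bucket": ..., "key": ...} and a hypothetical process_image function:

```python
import json
import logging

logger = logging.getLogger(__name__)


def process_image(bucket: str, key: str) -> None:
    """Hypothetical per-image work (compress + thumbnails); raise on failure."""
    raise NotImplementedError


def handle_batch(records, process):
    failures = []
    for record in records:
        try:
            body = json.loads(record["body"])
            process(body["bucket"], body["key"])
        except Exception:
            # Report only this message; the rest of the batch is deleted from the queue
            logger.exception("failed to process message %s", record["messageId"])
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}


def lambda_handler(event, context):
    return handle_batch(event["Records"], process_image)
```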